Introduction

PSYC 573

2024-08-27

History of Bayesian Statistics

cf. a nice popular science book by Sharon Bertsch McGrayne: The Theory That Would Not Die

Historical Figures

Thomas Bayes (1701–1762)

- English Presbyterian minister

- “An Essay towards solving a Problem in the Doctrine of Chances”, edited by Richard Price after Bayes’s death

Pierre-Simon Laplace (1749–1827)

- French mathematician

- Formalized the Bayesian interpretation of probability and developed most of the machinery for Bayesian statistics

In the 20th Century

Bayesian methods were the main way to do statistics until the early 1920s

Ronald Fisher and Frequentist scholars took over

“The theory of inverse probability is founded upon an error, and must be wholly rejected” (Fisher, 1925, p. 10)

Resurrection

Alan Turing’s algorithms in code breaking in World War II

Markov Chain Monte Carlo (MCMC) algorithms

- Brought Bayesian methods back into the mainstream of statistics

Why Should You Learn About the Bayesian Way?

- Gigerenzer (2004): It is one tool in your statistical toolbox

- Increasingly used as an alternative to frequentist statistics

- Computationally more stable for complex models

- A coherent way of incorporating prior information

  - Common sense knowledge, previous literature, sequential experiments, etc.

- More comprehensive tools for understanding your data and models

Bayesian Ideas

Reallocation of credibility across possibilities

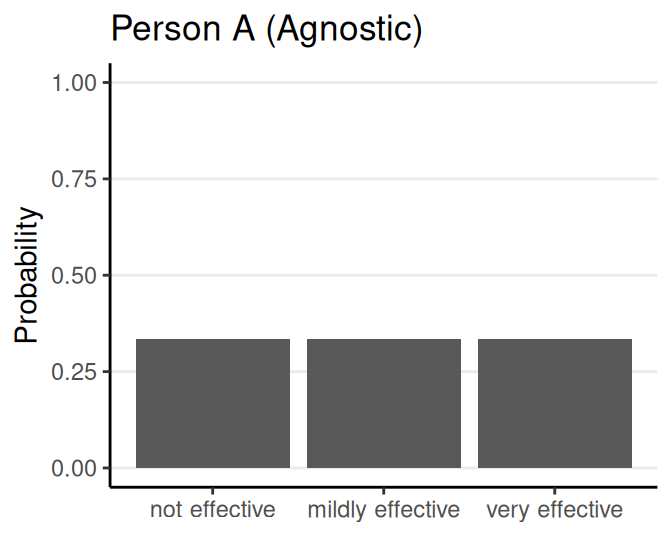

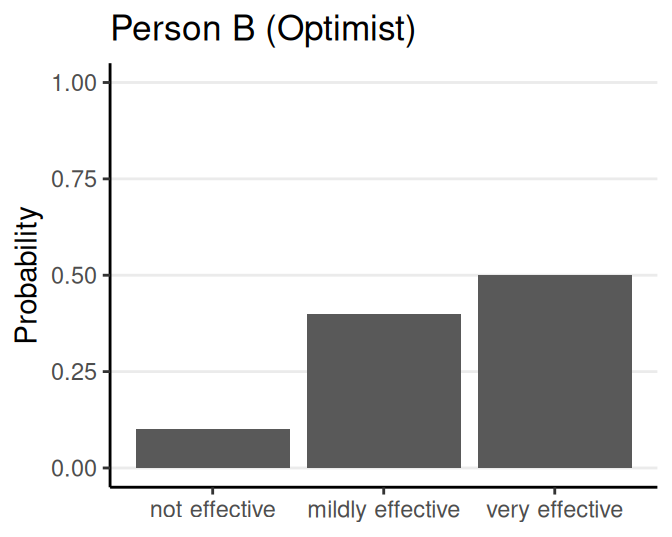

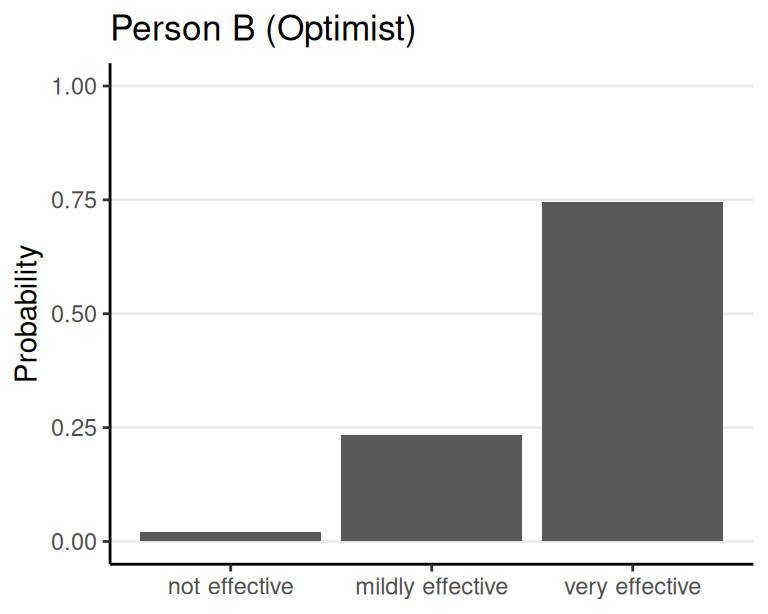

Hypothetical example: How effective is a vaccine?

Prior (before collecting data)

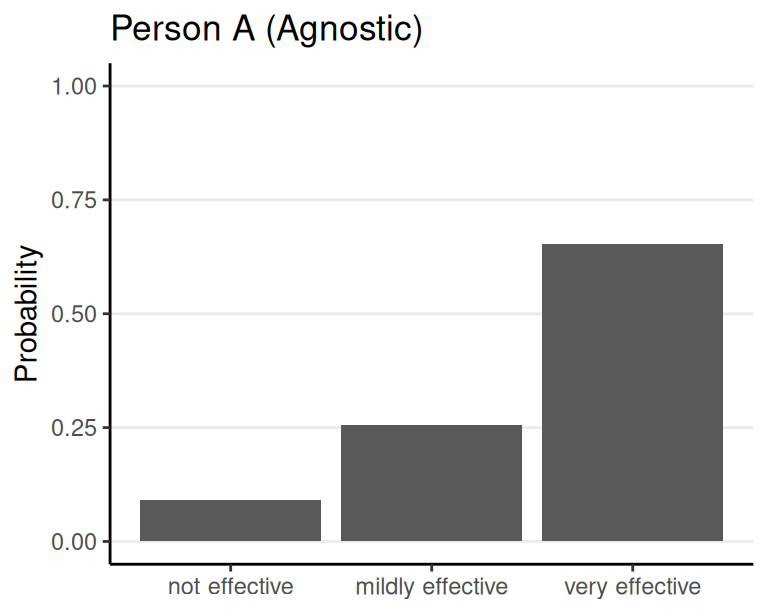

Updating Beliefs

After seeing results of a trial

- 4/5 with the vaccine improved

- 2/5 without the vaccine improved

Possibilities = Parameter Values

A Discrete Parameter

- Parameter: Effectiveness of the vaccine

- Possibilities: Not effective, mildly effective, very effective

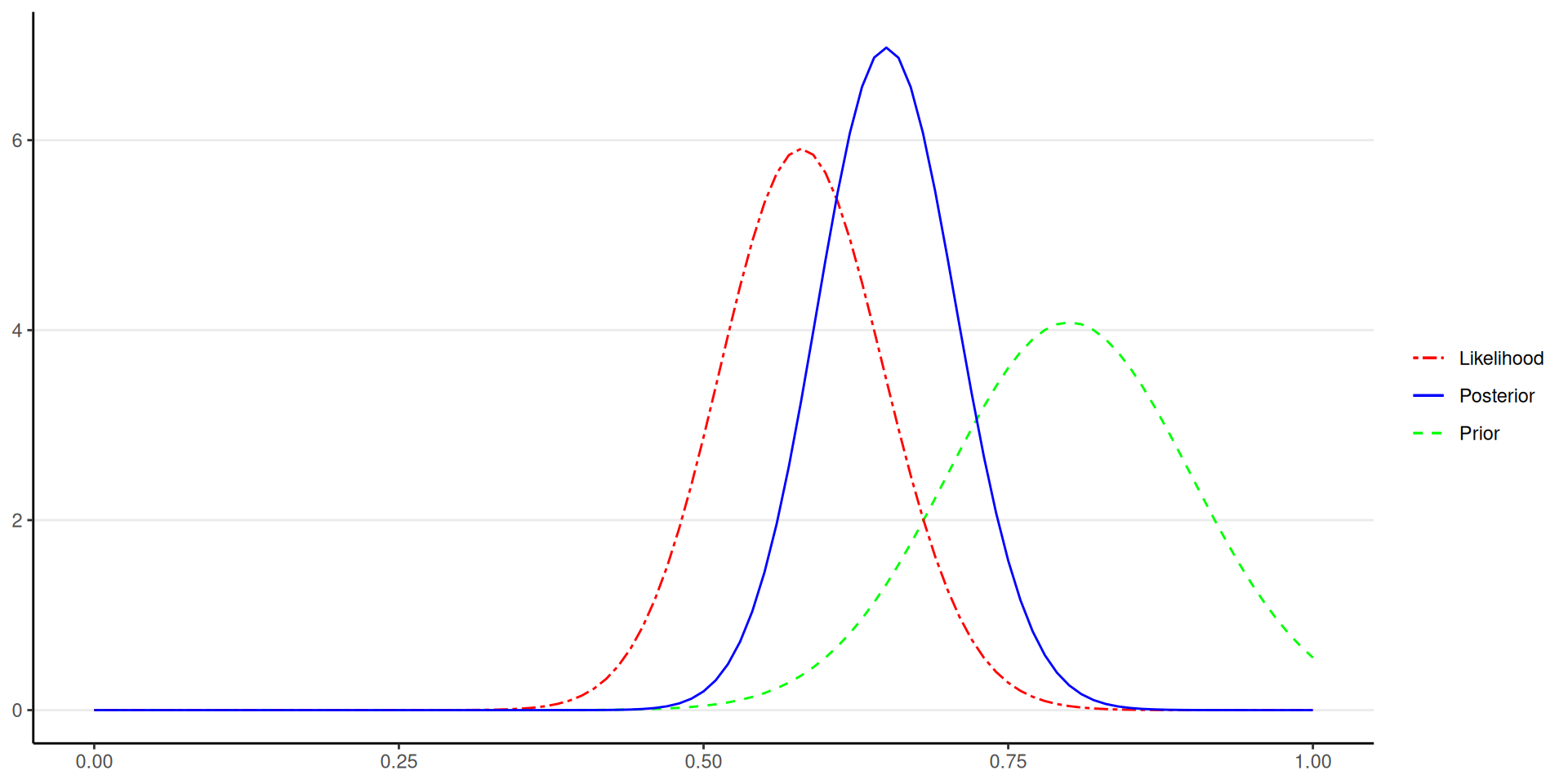
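The reallocation of credibility across the three possibilities can be sketched in a few lines of Python. This is a minimal illustration, not the computation behind the figures: the improvement probabilities attached to each possibility, the control-group probability, and the equal prior weights are all assumed values chosen for the example. Only the trial counts (4/5 and 2/5) come from the slides.

```python
from math import comb


def binom_pmf(k, n, p):
    """Probability of k successes in n independent trials with success probability p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# ASSUMED improvement probabilities with the vaccine under each possibility
p_vaccine = {
    "not effective": 0.4,
    "mildly effective": 0.6,
    "very effective": 0.8,
}
p_control = 0.4  # ASSUMED improvement probability without the vaccine

# ASSUMED prior: equal credibility for every possibility
prior = {h: 1 / 3 for h in p_vaccine}

# Likelihood of the trial results (4/5 with the vaccine, 2/5 without).
# The control-group factor is the same under every possibility, so it
# cancels when the posterior is normalized.
likelihood = {
    h: binom_pmf(4, 5, p) * binom_pmf(2, 5, p_control)
    for h, p in p_vaccine.items()
}

# Bayes' rule: posterior is proportional to prior times likelihood
evidence = sum(prior[h] * likelihood[h] for h in prior)
posterior = {h: prior[h] * likelihood[h] / evidence for h in prior}

for h, prob in posterior.items():
    print(f"{h}: {prob:.3f}")
```

Under these assumed numbers, credibility shifts from one third each to roughly 0.10, 0.35, and 0.55, so most of the belief moves toward "very effective" while no possibility is ruled out.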

A Continuous Parameter

- Parameter: Risk reduction by taking the vaccine

- Possibilities: \((-\infty, \infty)\) (any real number)

Using Bayesian analysis, one obtains an updated (posterior) probability for every possible value of a parameter, given the prior belief and the data
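Written as a formula, this is Bayes' rule. In the version below, \(\theta\) stands for a parameter value and \(y\) for the data; both symbols are notation assumed here rather than taken from the slides.

\[
P(\theta \mid y) = \frac{P(y \mid \theta)\, P(\theta)}{\sum_{\theta'} P(y \mid \theta')\, P(\theta')}
\]

For a continuous parameter, \(P(\cdot)\) is read as a density and the sum in the denominator becomes an integral over \(\theta'\).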

Steps of Bayesian Data Analysis

“Turning the Bayesian crank”

1. Define a mathematical model with parameters

2. Specify priors on parameters

3. Check priors

4. Fit model to data

5. Check for convergence

6. Evaluate the model using posterior predictive check

7. Modify the model and repeat steps 3-6

8. Obtain and interpret posterior distributions of the parameters
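To make the workflow concrete, here is a rough sketch of the steps on a toy problem, using only the Python standard library. It assumes a binomial model for the vaccinated group from the earlier example (4 of 5 improved) and a uniform Beta(1, 1) prior; both choices are illustrative assumptions. Because this model has a closed-form posterior, there is no sampler and therefore nothing to check for convergence. With MCMC, that step would involve inspecting sampler diagnostics.

```python
import random

# Define a model (ASSUMED for illustration): y ~ Binomial(n, theta)
n, y = 5, 4  # 4 of 5 vaccinated participants improved

# Specify a prior (ASSUMED): theta ~ Beta(1, 1), i.e., uniform on (0, 1)
a_prior, b_prior = 1.0, 1.0

rng = random.Random(573)  # fixed seed so the sketch is reproducible


def simulate_counts(a, b, n, draws, rng):
    """Draw theta ~ Beta(a, b), then simulate a count out of n for each draw."""
    counts = []
    for _ in range(draws):
        theta = rng.betavariate(a, b)
        counts.append(sum(rng.random() < theta for _ in range(n)))
    return counts


# Check the prior: what data does the prior alone imply?
prior_pred = simulate_counts(a_prior, b_prior, n, 2000, rng)

# Fit the model: the conjugate Beta-Binomial update has a closed form
a_post = a_prior + y
b_post = b_prior + (n - y)

# Check for convergence: not applicable here (no sampler was run)

# Posterior predictive check: how often do replicated data sets
# show at least as many improvements as were observed?
post_pred = simulate_counts(a_post, b_post, n, 2000, rng)
ppc_prop = sum(c >= y for c in post_pred) / len(post_pred)

# Obtain and interpret the posterior: Beta(a_post, b_post)
post_mean = a_post / (a_post + b_post)
print(f"Posterior: Beta({a_post:.0f}, {b_post:.0f}), mean = {post_mean:.3f}")
print(f"Share of replicated counts >= observed: {ppc_prop:.2f}")
```

If the prior predictive or posterior predictive simulations looked implausible, the model or the prior would be revised and the middle steps repeated.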

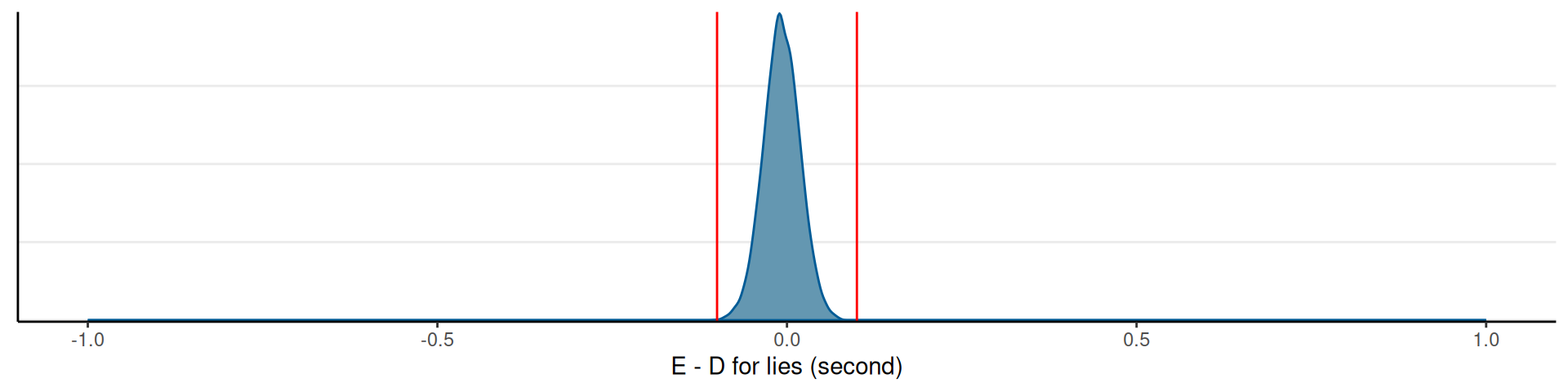

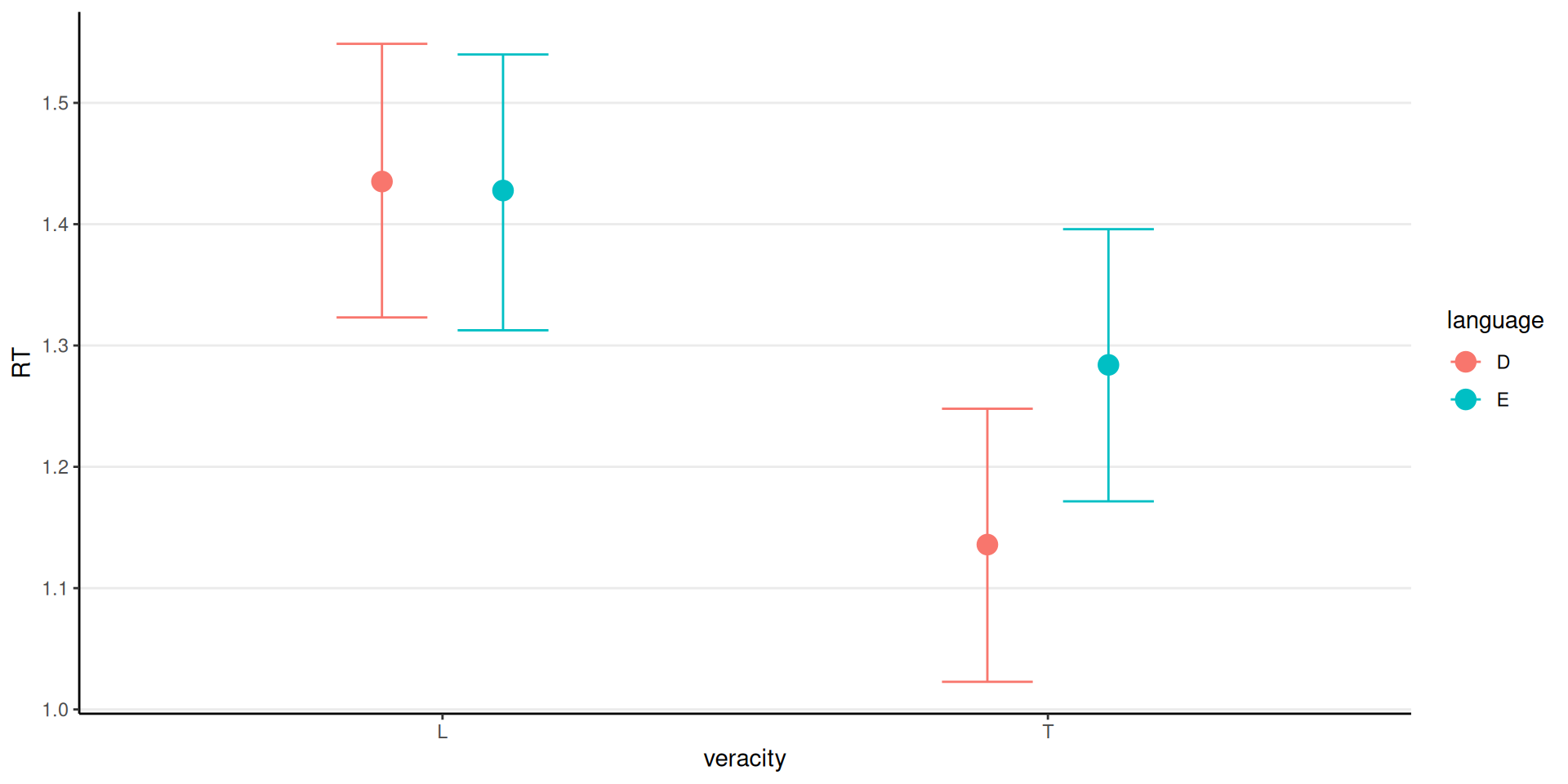

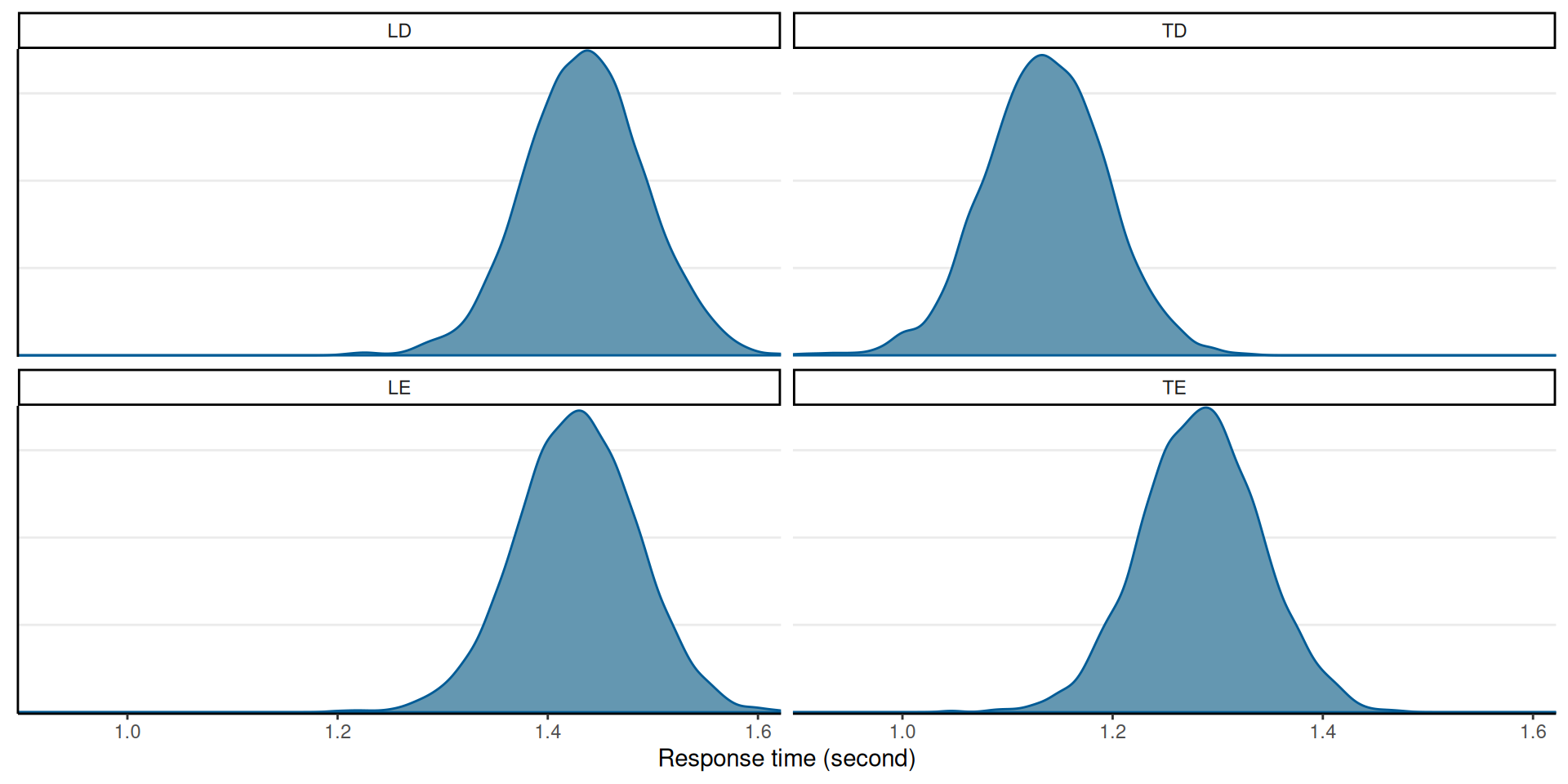

Example: Frank, Biberci, and Verschuere (2019, Cognition and Emotion)

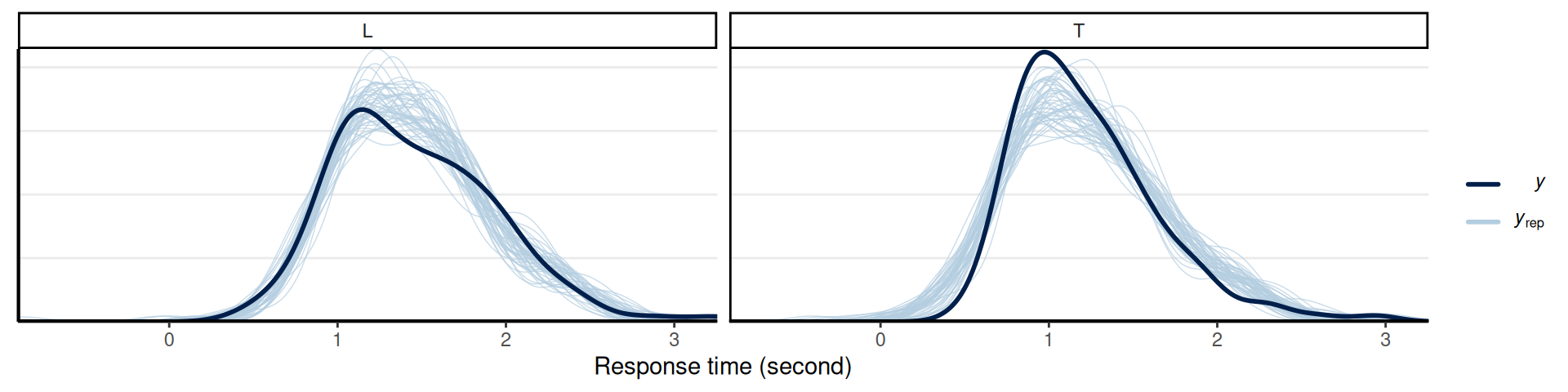

- Response time for 2 (Dutch–native vs. English–foreign) \(\times\) 2 (lie vs. truth) experimental conditions

Posterior of Mean RTs by Conditions

L = Lie, T = Truth; D = Dutch, E = English

Accepting the Null

Posterior Predictive Check

Multiple Experiments

Kay, Nelson, and Hekler (2016), Figure 2