Rows: 1,780

Columns: 5

$ id <int> 202, 202, 202, 202, 202, 202, 202, 202, 202, 202, 204, 204, 204, …

$ day <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 1, …

$ rpa1 <int> 2, 2, 1, 1, 1, NA, 4, 5, 4, 5, 4, 4, 4, NA, NA, 2, NA, NA, 3, 4, …

$ rpa2 <int> 2, 1, 1, 1, 2, NA, 3, 1, 1, 2, 3, 3, 4, NA, NA, 2, NA, NA, 1, 2, …

$ rpa3 <int> 3, 2, 2, 2, 2, NA, 2, 3, 2, 1, 2, 2, 4, NA, NA, 2, NA, NA, 1, 3, …Generalizability Theory

PSYC 520

Learning Objectives

- Provide some example applications of the generalizability theory (G theory)

- Contrast G theory with CTT (Table 10.1)

- Explain the differences between crossed and nested facets, and between random and fixed facets, and between G studies and D studies

- Estimate variance components and the G and \(\phi\) coefficients for two facets designs

Basic Concepts of G theory

Premise: there are multiple sources of error, and typically observed scores only reflect some specific conditions (e.g., one rater, two trials)

Goal: investigate whether observed scores under one set of conditions can be generalized to broader conditions.

Common Applications

- Rating data: Interrater reliability is a special case of G theory.

- Behavioral observations: generalizability across different raters, tasks, occasions, intervals of observations, etc.

- Imaging data: generalizability of scores across different processing decisions, tasks, etc.

Terminology

Facet: sources of error (e.g., raters, tasks, occasions). Each facet can be fixed or random.

Condition: level of a facet

Object of Measurement: usually people, which is not considered a facet. This is always random.

Universe of Admissible Operations (UAO): a broad set of conditions to which the observed scores generalize

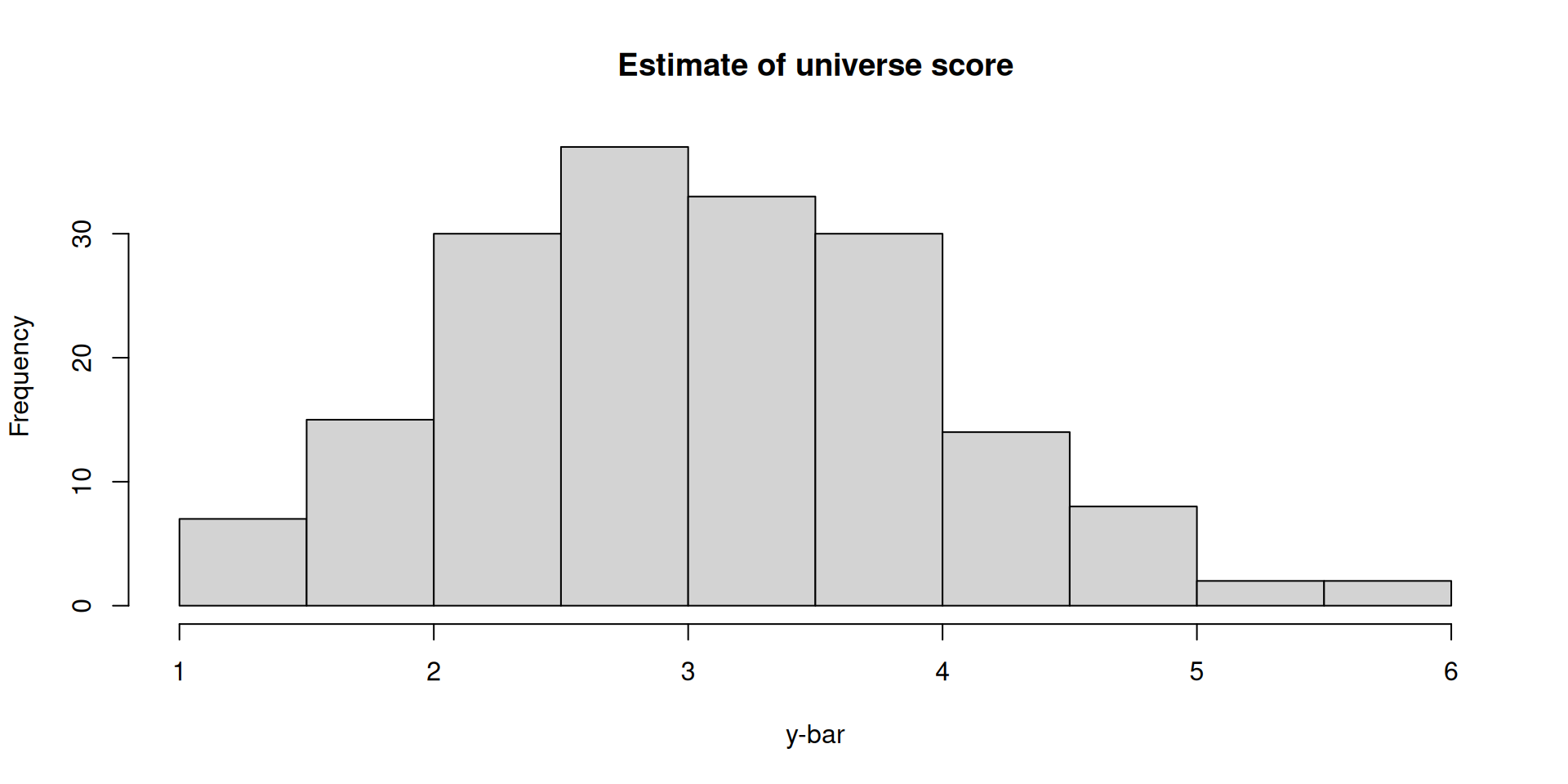

Universerse Score: average score of a person across all possible sets of conditions in the UAO

G Study: obtain accurate information on the magnitude of sources of error

D study: design measurement scenario with the desired level of dependability with the smallest number of conditions

In G theory, by evaluating the degree of error in different sources, we have evidence on whether scores from some admissible conditions are generalizable across conditions. If so, the scores are dependable (or reliable).

One-Facet Design

The example in the previous note with each participant rated by the same set of raters is an example of a one-facet design.

Formula for score of person \(i\) by rater \(j\):

\[ \begin{aligned} Y_{ij} & = \mu & \text{(universe score)} \\ & \quad + (\mu_p - \mu) & \text{(person effect)} \\ & \quad + (\mu_r - \mu) & \text{(rater effect)} \\ & \quad + (Y_{ij} - \mu_p - \mu_r + \mu) & \text{(residual)} \end{aligned} \]

This gives the variance decomposition for three components:

\[\sigma^2(Y_{pr}) = \sigma^2_p + \sigma^2_r + \sigma^2_{pr, e}\]

\(\sigma^2_p\) is person variance, or universe score variance.

Model of analysis: two-way random-effect ANOVA, aka random-effect model, variance component models, or multilevel models with crossed random effects.

Two-Facet Design

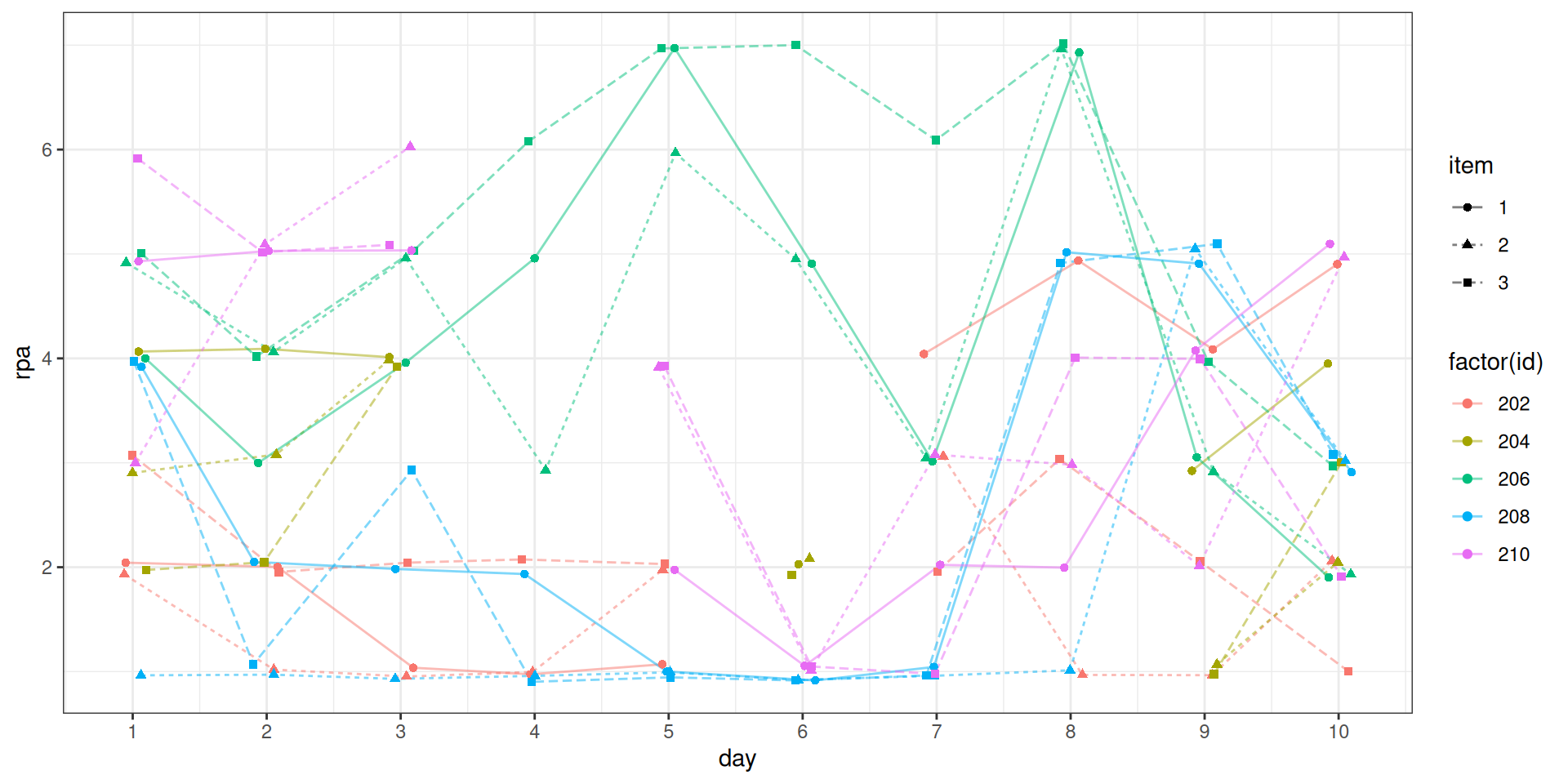

The first example comes from a daily diary study on daily rumination.

n = 178, T = 10 days

rpa1: I often thought of how good I felt today.rpa2: I often thought of how strong I felt today.rpa3: I often thought today that I would achieve everything.

Data Transformation

The data are in a typical wide format.

Wide Format

- Each row represents a person

- The other facets (rater, task) are embedded in the columns

Long Format

- Each row represents an observation (repeated measure)

- Each facet (rater, task) has its own column

rpa_long <- rpa_dat |>

select(id, day, rpa1:rpa3) |>

pivot_longer(

# select all columns, except the 1st one to be transformed

cols = rpa1:rpa3,

# The columns have a pattern "rpa(d)", where values

# in parentheses are the IDs for the facet. So we first

# specify this pattern, with a "." meaning a one-digit

# place holder,

names_pattern = "rpa(.)",

# and then specify that the place holder is for item.

names_to = c("item"),

values_to = "rpa" # name of score variable

)

head(rpa_long)# A tibble: 6 × 4

id day item rpa

<int> <int> <chr> <int>

1 202 1 1 2

2 202 1 2 2

3 202 1 3 3

4 202 2 1 2

5 202 2 2 1

6 202 2 3 2As can be seen, the data are now in a long format.

Nested vs. Crossed

Crossed Design

See Example 2 in the other note

Nested Design

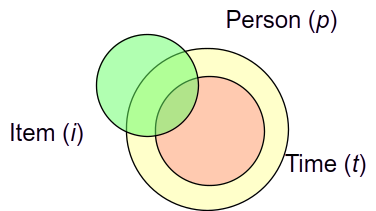

The data here has each participant (p) answering same three items (i) on 10 days (t). However, the participants do not share the same 10 calendar days or days of week, so unless one is interested in day of study effect, one would consider day 2 of Participant A to be different from day 2 of Participant B. Therefore, this is a (t:p) \(\times\) i design, where the two facets are nested.

When facet A is nested in facet B (i.e., a:b), each level of A is associated with only one level of B, but each level of B is associated with multiple levels of A.

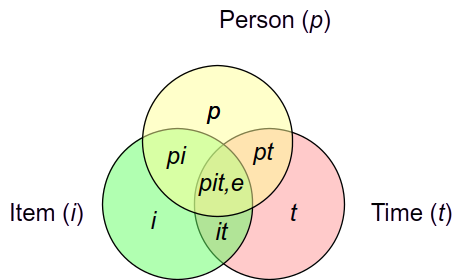

Variance Decomposition

With a two-facet design, we have the following variance components:

- Person

- Facet A

- Facet B

- Person \(\times\) A

- Person \(\times\) B

- A \(\times\) B

- Person \(\times\) A \(\times\) B*

- Error*

With a nested design, one cannot estimate the t:i interaction, and the main effect of t and the t:p interaction cannot be separated (See Table 10.6).

| source | var | percent |

|---|---|---|

| day:id | 0.87 | 0.32 |

| id:item | 0.39 | 0.14 |

| id | 0.58 | 0.21 |

| item | 0.07 | 0.02 |

| Residual | 0.84 | 0.31 |

Bootstrap Standard Errors and Confidence Intervals

See Part 2 of the notes.

Interpreting the Variance Components

Venn diagrams

Standard deviation

E.g., The ratings are on a 7-point scale. With \(\hat \sigma_{pr}\) = 0.622, this is the margin of error due to person-by-item interaction.

Fixed vs. Random

Generally G theory treats conditions of a facet as random, meaning they are regarded as a random sample from a population collection of samples. However, if such an assumption does not make sense, such as when people are always going to be evaluated on the same tasks, and there is no intention to generalize beyond those tasks, the task facet should be treated as fixed. Then there are two options:

If it makes sense to average the different conditions in a fixed facet (e.g., average score across tasks), follow the code below.

Otherwise, perform a separate G study for each condition of the fixed facet.

If treating item as fixed, the person \(\times\) item variance will be averaged and become part of the universe score

The residual will be averaged and become part of person \(\times\) time variance

source var percent

1 id 0.7126277 0.3823251

2 day:id 1.1513035 0.6176749Relative vs. Absolute Decisions

- Error for relative: anything that involves person, including the residual

- \(\sigma^2_{pi}\) + \(\sigma^2_{pt}\) + \(\sigma^2_{pit, e}\)

- Error for absolute: every term other than person

- \(\sigma^2_i\) + \(\sigma^2_t\) + \(\sigma^2_{pi}\) + \(\sigma^2_{pt}\) + \(\sigma^2_{it}\) + \(\sigma^2_{pit, e}\)

D Studies

Decision studies: Based on the results of G studies, try to minimize error as much as possible.

- Find out how many conditions can be used to optimize generalizability

- Similar to using the Spearman-Brown prophecy formula, but consider multiple sources of errors

G and \(\phi\) Coefficients

G coefficient: For relative decisions

- Only include sources of variation that would change relative standing as error (\(\sigma^2_\text{REL}\))

- i.e., interaction terms that involve persons

\[ G = \frac{\hat \sigma^2_p}{\hat \sigma^2_p + \hat \sigma^2_\text{REL}} \]

\(\phi\) coefficient: For absolute decisions

- Include all sources of variation, except for the one due to persons, as error (\(\sigma^2_\text{ABS}\))

\[ \phi = \frac{\hat \sigma^2_p}{\hat \sigma^2_p + \hat \sigma^2_\text{ABS}} \]

These are analogous to (but not the same as) reliability coefficients.

g phi

0.7052649 0.6867227 See Cranford et al. (2006) for similar discussion of generalizability coefficients in longitudinal data.

However, for reliability more comparable to \(\alpha\) and \(\omega\) reliability coefficients, see Lai (2021).

Note on Notation

The caret (^) symbol in \(\hat \sigma^2\) indicate that it is an estimate from the sample.

In D studies, we typically use \(n'\) to represent number of conditions for a facet to be used when designing the study. For example, with the two-facet crossed design with raters and tasks,

\[ \hat \sigma^2_\text{REL} = \frac{\sigma^2_{pr}}{n'_r} + \frac{\sigma^2_{pt}}{n'_t} + \frac{\sigma^2_{prt, e}}{n'_r n'_t} \]